TAMING THE EMAIL MONSTER IN ONLINE BASIC COMMUNICATION COURSES

SCHWARTZMAN, ROY

UNIVERSITY OF NORTH CAROLINA AT GREENSBORO

COMMUNICATION STUDIES DEPARTMENT.


Synopsis:

Much of the information overload in online courses stems from the volume of student emails. Controlling for instructor and course content in an online basic oral communication course, this study demonstrates how recursive analysis of thematic content in student emails enables course redesign that dramatically reduces the quantity and improves the quality of incoming student messages.


A spate of studies has raised reservations about the wisdom of hastily embracing online course delivery. The litany of lamentations about web-based and web-augmented general education oral communication courses strikes a recurrent refrain. Supposedly, instructional workload increases from the onslaught of emails, community withers in the limbo of cyberspace, and student learning outcomes amount to the lackluster “no significant difference” compared with more traditional instructional formats (Russell, 2001). Do these concerns represent genuine shortcomings of online communication pedagogy, or do the complaints reflect a limited perspective and problematic implementation of computer-mediated instruction? This essay strongly suggests that at least one major objection to online and web-supplemented basic communication courses can be avoided entirely or, if it arises, “designed out” of the course. Fundamentally, some objections to online course delivery reflect faulty course design rather than inherent limitations of the online medium.

This study examines how a systematic analysis of the prevalent themes within student emails can diagnose ways to clarify course content or alter its mode of delivery so as to reduce inquiries and complaints from students. Teaching in an electronically saturated environment plunges instructors into a communication cycle that fuels information overload: messages become easier to concoct and disseminate, but the speed of production outpaces the ability to process them. A primary culprit, especially in fully online courses and in hybrid traditional courses (i.e., class sessions supplemented by learning management systems and tools), is email. Incoming messages from students seem to proliferate, which in turn stretches the instructor’s capacity to respond fully to each student.

Overview of the Online Instructional Challenge: Email Proliferation

The sheer volume of incoming email from students constitutes a major challenge for instructors of online courses. Many studies (e.g., Lee, 2004) express concern that online instructors quickly become inundated with incoming email messages that distract them from focusing on course content. The excessive time expenditure has been documented. Cavanaugh’s (2005) analysis of time expenditures by online instructors finds that the average number of personal email replies in each of the ten courses studied ranged from 300 to 600, requiring an average of 36 hours of each instructor’s time during the academic term. Kearns and colleagues (2014) surveyed 65 experienced online instructors, 87 percent of whom had taught online for at least three years. Even among these seasoned online teachers, “Overwhelmingly, survey respondents identified the volume of incoming messages as their biggest challenge in managing e-mail” (p. 24). The intimidating burden of triaging, sorting, and responding to these messages also can deter instructors from teaching online, potentially limiting an educational institution’s ability to extend its outreach further into the electronic environment. A shortage of instructors willing to teach online could, in turn, reduce opportunities to recruit prospective students.
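Taking Cavanaugh’s reported figures at face value, and assuming the 36 hours cover the 300 to 600 replies in a single course, the implied time cost per reply can be estimated roughly:

```latex
\[
\frac{36\ \text{h} \times 60\ \text{min/h}}{600\ \text{replies}} = 3.6\ \text{min/reply}
\qquad\text{to}\qquad
\frac{36\ \text{h} \times 60\ \text{min/h}}{300\ \text{replies}} = 7.2\ \text{min/reply}
\]
```

This is only an illustrative back-of-the-envelope estimate; it shows that each avoidable message costs several minutes of instructor time.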

Aside from sheer quantity, inefficient use of email can waste valuable time. One efficiency expert estimates that each small business in the United States squanders an average of 2,000 hours yearly through inefficient handling of emails (Productivity, 2012). That number presumably climbs as the size of the organization and the scale of communication increase. Much of the added time that faculty report needing to teach online rather than face-to-face stems from email communication. A study of eight fully online course sections in three academic departments reveals that email was less efficient than threaded discussions or chat sessions (Spector, 2005). The same study finds that the added time to prepare and administer online courses does not correlate with improvements in quality. Summarizing the cumulative findings of several studies tracking instructional time expenditures for online coursework, Spector notes that “online teachers invest more time in online teaching than in face-to-face teaching. This time generally does not result in improved learning nor is it generally recognized in the form of additional faculty income or other faculty benefits” (p. 17). Evidently, much of the time devoted to emails in online teaching is unproductive: a greater quantity of messages sent and received does not yield gains in educational quality.

The observation that this added labor goes unrecognized in faculty income or benefits deserves further attention. Schwartzman and Carlone (2010) observe that much of the labor involved in online teaching remains private and relatively unobserved. Instead of working in the easily noticed classroom, where students and instructor engage in public dialogue, the online instructor toils privately, often off campus, performing comparatively invisible labor that easily slips through standard evaluation tools such as peer observation of teaching. Greater efficiency in email communication can enable the online instructor to reallocate that labor to tasks more directly tied to positive student outcomes, such as providing more extensive feedback on assignments or designing more interactive course components.

Improving the efficiency of email communication by no means implies reducing student interaction. Indeed, a large body of research indicates that for fully online courses email “enhances the learning experience by building on the learner-instructor relationship” (Robles & Braathen, 2002, p. 44). Cavanaugh (2005) cautions that reducing email communication could harm course quality by reducing student-teacher interaction. Since email represents one of the most personal, individualized ways the instructor and student can communicate, the objective should not be simply to attenuate this channel. In their systematic review of nine scholarly articles on fully online nutrition education courses, Cohen, Carbone, and Beffa-Negrini (2011) observe that the high time demands of online teaching call for “time-efficient techniques” that do not reduce instructional effectiveness (p. 85). The key issue then becomes how to concentrate email communication on personal, course-related connections rather than on superfluous correspondence, such as questions that the instructor assumes the syllabus or assignment guidelines have already answered.

Method and Results

A thematic content analysis was conducted on all incoming student emails (n = 249) in a fully online section of the introductory basic oral communication course, which covered public speaking, interviewing, interpersonal communication, and group problem-solving. The course was a general education requirement for all undergraduate students at a small (enrollment ~6,000) Midwestern university in the United States. Nineteen students were enrolled in this section. Each incoming student email was categorized by theme using the scheme shown in Table 1.

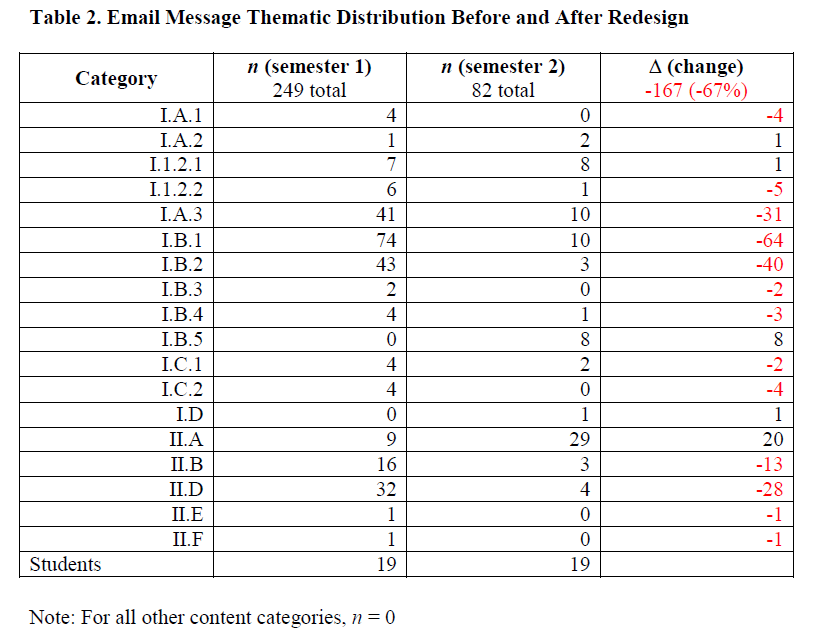

The distribution of themes, recorded in Table 2, revealed where messages clustered in areas amenable to redesign or clarification. The content analysis pinpointed what kinds of information requests proliferated, thereby suggesting how they could be managed. Themes relating to the usability of course components, navigational confusion, technical challenges, and difficulty in locating content were identified as actionable items to guide redesign.
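The tallying step behind such a distribution can be sketched in Python. This is a minimal illustration, not the study’s actual procedure: it assumes each email has already been hand-coded with a single category label from the Table 1 scheme (written as outline codes such as "I.A.2.a"), and the set of prioritized codes is a hypothetical choice based on the themes named above.

```python
from collections import Counter

# Hypothetical set of Table 1 codes treated as actionable redesign targets,
# chosen from the themes named in the text (technical, navigation, functions).
PRIORITIZED: set[str] = {
    "I.A.1",    # hardware or software
    "I.A.2.a",  # navigation
    "I.A.2.b",  # functions of course features
    "I.A.3",    # electronic communication issues
}


def theme_distribution(codes: list[str]) -> dict[str, float]:
    """Return each category code's share of all coded emails."""
    counts = Counter(codes)
    total = len(codes)
    # An empty input yields an empty Counter, so no division by zero occurs.
    return {code: n / total for code, n in counts.items()}


def prioritized_share(codes: list[str], prioritized: set[str] = PRIORITIZED) -> float:
    """Fraction of coded emails that fall in a prioritized category."""
    if not codes:
        return 0.0
    return sum(code in prioritized for code in codes) / len(codes)


if __name__ == "__main__":
    # Made-up codes for illustration only; these are not the study's data.
    sample = ["I.A.2.a", "I.A.2.a", "I.A.2.b", "I.B.1", "II.A", "I.A.1"]
    for code, share in sorted(theme_distribution(sample).items(), key=lambda kv: -kv[1]):
        print(f"{code:8} {share:.0%}")
    print(f"prioritized share: {prioritized_share(sample):.0%}")
```

Running the same tally on each term’s emails would show whether reductions fall in the prioritized categories, which is the comparison the redesign relies on.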

Rather than merely documenting complaints about the volume of messages, the analysis treated the emails as guides for course redesign that would pre-empt questions about how course tools function or where to find material. This use of recursive feedback, which continuously adapts the course in response to observable student reactions to its components (Okita & Schwartz, 2013), yielded a dramatic reduction in incoming emails, as documented in Table 2. The reduction occurred almost entirely in messages unrelated to the course’s intellectual content and has persisted for four years beyond the initial redesign.

Table 1. Online Basic Communication Course Email Category Scheme

I. Task messages

A. Technical questions

1. Hardware or software: inoperable features, computer crashes, uploading and downloading, etc.

2. Course features: any items specific to the course platform format and functioning.

a. Navigation: where to locate certain features, items, or assignments.

b. Functions: how to use specific course features (e.g., document sharing, webliography, etc.)

3. Electronic communication issues: sending/receiving email/assignments, preparing attachments, etc.

B. Assignments and course content

1. Questions/confusions about assignments or instructions

2. Questions/arguments about assessment of student work (e.g., disputing a grade)

3. Enrichments: applying course material to student’s experience, finding examples of ideas, etc.

4. Evaluating assignments: comments about the level of difficulty, value, or interest of an assignment or activity

5. Off-campus accommodations (for exams, make-ups, performances, etc.)

C. Other task messages

1. Student identity issues (verifying unidentified correspondence and assignments)

2. Rescheduling appointments or assignments

D. Enrollment issues (add, drop)

II. Maintenance messages

A. Phatic communication: any ritualistic message that focuses on building student/instructor relationships (e.g., thank you, greetings, congratulations, sympathy, encouragement)

B. Self-disclosure: revealing personal information to others

C. Gossip: rumors or comments about people or things

D. Excuses: providing reasons for not fulfilling a course requirement or doing an assignment (includes requests for extensions of deadlines)

E. Harmonizing: reducing conflict by compromising or by defusing disagreements

F. Aggression: attacking, insulting, or flaming

G. Other maintenance messages

III. Unclassified messages

Note: Categories in italics indicate prioritized targets for instructional adjustment.

Although the specific interventions resulting from the content analysis were somewhat idiosyncratic to the course and its delivery platform, a brief summary of the changes prompted by the email feedback yields some generalizable insights. Course components that students identified as user-unfriendly were supplemented in several ways. If the difficulty lay with the learning management system, students were given direct links to both the multimedia tutorial and the university’s help desk at the point of access for that feature. In addition, the instructor provided written step-by-step instructions for using that feature. If the feature was integral to the course, students had to complete an interactive tutorial in a preliminary unit before they could access the potentially troublesome feature. In effect, students had to “pass” (i.e., demonstrate competency in) a sample version of the course feature in order to access the fully functional component within the course.
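The access rule described above can be stated as a small predicate. In practice such gating is usually configured through the learning management system’s own release or prerequisite settings; this Python sketch only makes the logic explicit, and the feature and tutorial names in it are hypothetical.

```python
# Hypothetical mapping from a gated course feature to the tutorial that must
# be passed first; names are illustrative, not taken from any real platform.
PREREQUISITES: dict[str, str] = {
    "document_sharing": "document_sharing_tutorial",
    "webliography": "webliography_tutorial",
}


def can_access(feature: str, passed_tutorials: set[str]) -> bool:
    """Allow access if the feature is ungated or its tutorial has been passed."""
    required = PREREQUISITES.get(feature)
    return required is None or required in passed_tutorials


if __name__ == "__main__":
    print(can_access("webliography", set()))                      # gated, not passed
    print(can_access("webliography", {"webliography_tutorial"}))  # gated, passed
    print(can_access("syllabus", set()))                          # not gated
```

Keeping the gate as a lookup table means new troublesome features can be added as the email analysis identifies them, without changing the rule itself.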

Navigational confusion and difficulty in locating content were addressed by providing students with multiple points of access to frequently used course components. Access points to key course areas were placed where, according to student emails, students were already looking for or expecting to find the content they needed. To clarify several areas of the course, assignment instructions were revised to address explicitly the points that students reported as sources of confusion. To provide regular reminders about where to access content and when and where to go in the course for various assignments, email templates were created and saved, complete with screenshots of the appropriate access points. These templates could be reused each term, eliminating the need to re-create the same messages repeatedly. Similarly, an archive of frequently used feedback can be saved and inserted into student assignments using macros, which quickly generate standard comments that can be customized without rewriting the entire text (Nagel & Kotze, 2010).
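A reusable reminder of the kind described above can be sketched with Python’s standard `string.Template` class. The placeholders and wording are hypothetical, screenshots would still need to be attached separately, and the optional `note` argument stands in for the per-student customization mentioned in the text.

```python
from string import Template

# Hypothetical saved reminder; $name, $assignment, $due, and $location are
# placeholders filled in each term instead of retyping the whole message.
REMINDER = Template(
    "Hi $name,\n\n"
    "A reminder that $assignment is due $due. "
    "You can find it under $location in the course menu.\n"
)


def build_reminder(note: str = "", **fields: str) -> str:
    """Fill the saved template and append an optional personal note.

    Template.substitute raises KeyError if any placeholder is left unfilled,
    which catches incomplete reminders before they are sent.
    """
    message = REMINDER.substitute(**fields)
    return message + (f"\n{note}\n" if note else "")


if __name__ == "__main__":
    print(build_reminder(
        name="Sam",
        assignment="the informative speech outline",
        due="Friday at 5 p.m.",
        location="Unit 3 > Assignments",
        note="Your topic choice looks promising.",
    ))
```

Only the fields and the short note change from student to student; the bulk of the message is written once and reused.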

Finally, some of the more frequent areas of confusion or inquiry were addressed explicitly in a thematically organized Frequently Asked Questions (FAQ) section (Kearns, Frey, Tomer, & Alman, 2014). Students could edit this area, so they could continue to enhance its content by building on previous answers. Offloading this type of content from email onto the course site enabled the instructor to tell students more precisely which topics were best suited for email (Ko & Rossen, 2010).

When the redesigned course (n = 19 students) was offered the next semester with the same instructor and content, incoming student emails decreased by 67 percent, with reductions concentrated in the previously identified problem areas. Student reactions to instructional technology may be mediated by their perceptions of the instructor’s credibility (Schrodt & Witt, 2006). Studies that measure reactions to different course delivery methods therefore must control for the instructor, so that perceived credibility does not act as an intervening variable that undermines the validity of results. The current study not only held the instructor constant but also maintained the same course content (textbook, major assignments, and assessments) in both sections.
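Assuming the 67 percent reduction is measured against the 249 emails from the original section (the post-redesign count is not reported directly here), and given that both sections enrolled 19 students, the figures imply roughly:

```latex
\[
E_{\text{after}} \approx (1 - 0.67) \times 249 \approx 82\ \text{emails},
\qquad
\frac{249}{19} \approx 13.1\ \text{emails per student}
\;\longrightarrow\;
\frac{82}{19} \approx 4.3\ \text{emails per student}
\]
```

Because 67 percent is presumably rounded, the implied post-redesign count is approximate (about 81 to 83 messages).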

Discussion and Implications

In addition to the direct benefit of reducing instructor workload, evidence-based course redesign as described in this study reclaims previously lost learning opportunities. Instead of responding to individual problems stemming from technical, instructional, or navigational confusion, the instructor of the redesigned course can devote more time to intensifying and personalizing the course content. Students also recoup time they previously spent muddling over how to use course tools or find content, and they can redirect that time toward more productive tasks, such as delving deeper into the subject matter and preparing their assignments more thoroughly.

Probably because so much of the earlier research on distance education consisted of simple comparison studies juxtaposing online with face-to-face course delivery, results were attributed to the use of technology, with minimal attention to the instructional design of the course or to the instructional uses of a highly flexible tool, the Web. If the current study holds one lesson, it is that one cannot evaluate electronic technology separately from the instructional uses made of it. Smith and Dillon (1999) call this problem the media/method confound, a label that captures the interrelationship between technology and instructional design. In other words, it is not the technology per se that has an effect, but rather the way teachers and students use it. Meyer (2002) concurs that too many studies attribute results to instructional technology per se without reflecting on how the technology is used in ways that might inhibit or enhance learning.

As amply documented, a major supposed drawback of teaching online is the deluge of emails from students. The double standard underlying such complaints is curious. Rarely if ever do instructors complain about too much student participation in the classroom or students who are too eager to discuss the course outside of class with the instructor. Yet when online students exhibit exactly the same participatory behaviors that would signify initiative and interest in a traditional classroom setting, their electronic communication becomes burdensome and unmanageable. This reaction may tell more about the instructor’s attitude toward computer-mediated communication than about the nature of online education.

Complaints about excessive emails also miss a fundamental point: student communication always sends a meta-message. An instructor inundated with student emails would be wise to examine the nature of the messages to determine whether they signal problems with the design of the course or with the quality and availability of instructional resources. The point is not simply that students are sending emails, but rather what those emails communicate about the student’s educational experience. The initial offering of an online course involves much guesswork, since only experience will show how students actually use and respond to the course materials. In this study, rather than serving as a source of complaints about online education, incoming student emails became the basis for systematic course improvement. If most of the emails ask how to navigate the course site or how to interpret instructions, they send a clear cue to reorganize the course site or rephrase assignment guidelines. Is excessive email simply part of the burden of online instruction, or can the email deluge be stemmed by analyzing message content and using it as a stimulus for online course improvement? This question lies at the heart of resolving the media/method confound.

References

Cavanaugh, J. (2005). Teaching online—a time comparison. Online Journal of Distance Learning Administration, 8(1). Retrieved from https://www.westga.edu/~distance/ojdla/spring81/cavanaugh81.htm

Cohen, N. L., Carbone, E. T., & Beffa-Negrini, P. A. (2011). The design, implementation, and evaluation of online credit nutrition courses: A systematic review. Journal of Nutrition Education and Behavior, 43(2), 76-86.

Kearns, L. R., Frey, B. A., Tomer, C., & Alman, S. (2014). A study of personal information management strategies for online faculty. Journal of Asynchronous Learning Networks, 18(1), 19-35.

Ko, S., & Rossen, S. (2010). Teaching online: A practical guide. New York: Routledge.

Lee, K. S. (2004). Web-based courses for all disciplines: How? Journal of Educational Media and Library Sciences, 41(4), 437-447.

Meyer, K. A. (2002). Quality in distance education: Focus on on-line learning. ASHE-ERIC Higher Education Report, 29(4). San Francisco: Jossey-Bass.

Nagel, L., & Kotze, T. G. (2010). Supersizing e-learning: What a COI survey reveals about teaching presence in a large online class. Internet and Higher Education, 13(1-2), 45-51.

Okita, S. Y., & Schwartz, D. L. (2013). Learning by teaching human pupils and teachable agents: The importance of recursive feedback. Journal of the Learning Sciences, 22(3), 375-412.

Productivity expert Kimberly Medlock says email wastes 2,000 hours a year at average office. (2012, June 5). PR Newswire US.

Robles, M., & Braathen, S. (2002). Online assessment techniques. Delta Pi Epsilon Journal, 44(1), 39-49.

Russell, T. L. (2001). The no significant difference phenomenon: A comparative research annotated bibliography on technology for distance education (5th ed.). Montgomery, AL: IDECC.

Schrodt, P., & Witt, P. L. (2006). Students’ attributions of instructor credibility as a function of students’ expectations of instructional technology use and nonverbal immediacy. Communication Education, 55, 1-20.

Schwartzman, R., & Carlone, D. (2010). Online teaching as virtual work in the new (political) economy. In S. D. Long (Ed.), Communication, relationships, and practices in virtual work (pp. 46-67). Hershey, PA: IGI Global.

Smith, P., & Dillon, C. (1999). Comparing distance learning and classroom learning: Conceptual considerations. The American Journal of Distance Education, 13(2), 6-23. Retrieved from http://www.ajde.com/contents/vol13_2.htm

Spector, M. J. (2005). Time demands in online instruction. Distance Education, 26(1), 5-27.